Hi everyone (if someone’s there). There’s something magical about teaching computers to understand not just our words, but our intent. I recently built a chatbot with Google’s Gemini 2.0 Flash model, and the best part? You can build it too. Let me walk you through this project, which blends cutting-edge AI with practical applications.

1. The Spreadsheet Whisperer

Behind the Curtain

This chatbot project came from a simple question: “Why can’t I just build a chatbot that answers questions about any data I give it?” Here’s how it works:

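```python
# Imports assume the LlamaIndex integration packages for Ollama and Hugging Face
from llama_index.llms.ollama import Ollama
from llama_index.embeddings.huggingface import HuggingFaceEmbedding

# The brain of the operation: a local LLM served by Ollama, plus an embedding model
llm = Ollama(model="llama3.2-vision")
embed_model = HuggingFaceEmbedding(model_name="BAAI/bge-large-en-v1.5")

# Custom prompt engineering: {context_str} and {query_str} are filled in at query time
qa_prompt = (
    "Context information:\n{context_str}\n"
    "Answer like a data analyst friend: {query_str}\n"
    "If unsure, just say so - no guessing!"
)
```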
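To make the two placeholders concrete, here is a minimal sketch of how a template like this gets filled before it reaches the model. It uses plain `str.format` rather than the framework's own prompt class, and the `fill_prompt` helper and the sample rows are made up for illustration; they are not taken from the project.

```python
# Same template as above, repeated so the snippet runs on its own.
QA_PROMPT = (
    "Context information:\n{context_str}\n"
    "Answer like a data analyst friend: {query_str}\n"
    "If unsure, just say so - no guessing!"
)


def fill_prompt(context_chunks, question):
    """Join the retrieved chunks and substitute them into the template."""
    context = "\n".join(context_chunks)
    return QA_PROMPT.format(context_str=context, query_str=question)


# Hypothetical rows pulled from a spreadsheet, for illustration only.
chunks = ["Region: North, Q1 sales: 120", "Region: South, Q1 sales: 95"]
prompt = fill_prompt(chunks, "Which region sold more in Q1?")
print(prompt)
```

The model only ever sees the filled-in text, which is why the "no guessing" instruction sits right next to the context it applies to.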

The magic happens in three layers:

- Document Alchemy: Converts tables into searchable knowledge using DoclingReader

- Contextual Understanding: BAAI embeddings create “mental maps” of data relationships

- Conversational Interface: Streamlit provides the chat interface we all recognize
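If the “mental maps” idea feels abstract, the toy sketch below may help. It ranks text chunks by cosine similarity to a query vector, which is the general idea behind embedding-based retrieval. The three-dimensional vectors are invented for readability (a real embedding model produces much longer ones), and the `top_k` helper is a hypothetical name, not part of the project.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means 'pointing the same way'."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, indexed_chunks, k=1):
    """Return the texts of the k chunks whose vectors are closest to the query."""
    ranked = sorted(
        indexed_chunks,
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


# Made-up vectors standing in for real embeddings.
indexed = [
    ("Q1 sales by region", [0.9, 0.1, 0.0]),
    ("Employee headcount", [0.1, 0.9, 0.1]),
    ("Office locations", [0.0, 0.2, 0.9]),
]
query = [0.8, 0.2, 0.1]  # pretend this encodes "How did sales go?"
best = top_k(query, indexed, k=1)
print(best)
```

The chunks that come back from a step like this are what end up in the `{context_str}` slot of the prompt.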

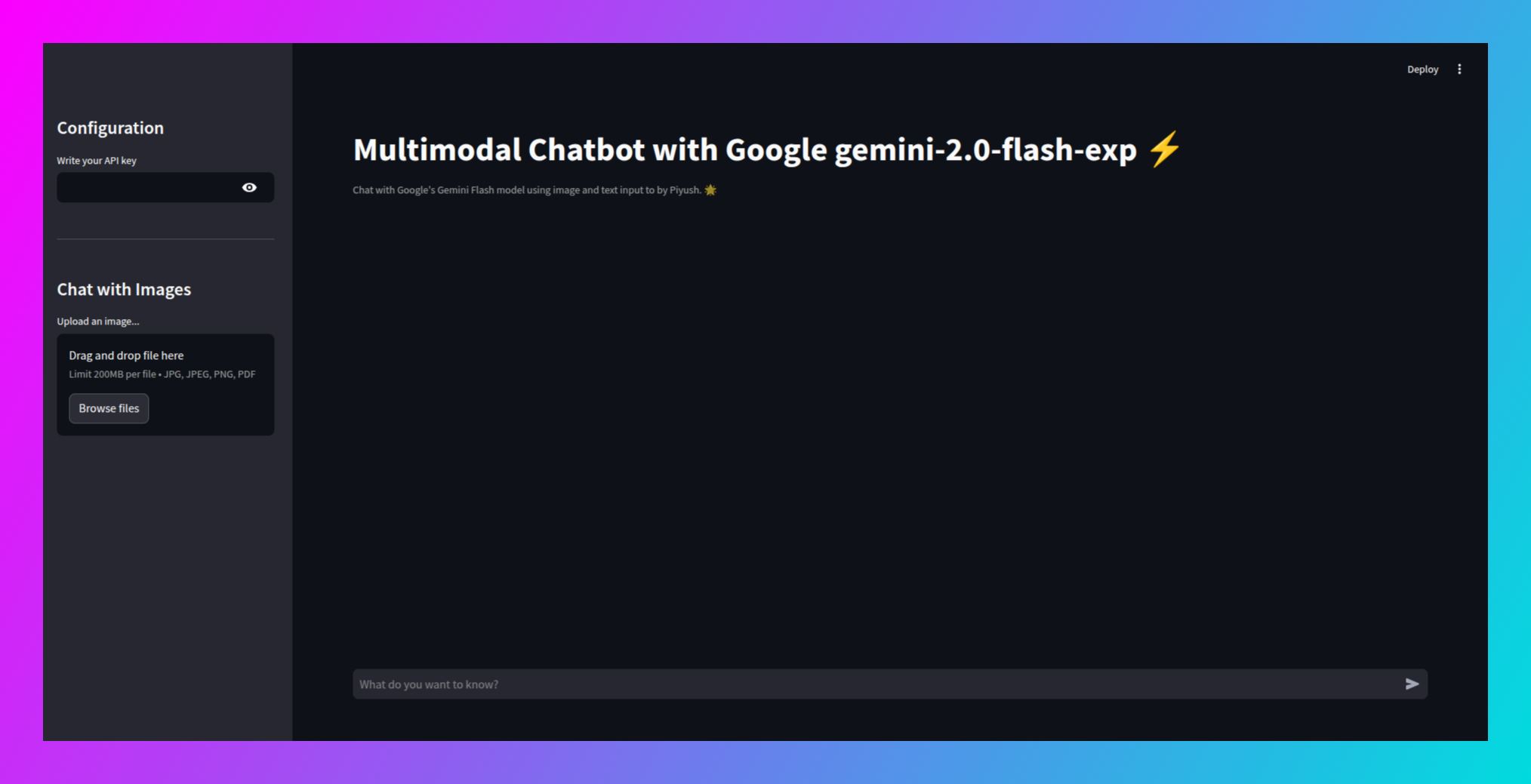

2. Seeing Through AI’s Eyes: Multimodal Chat

When Words Meet Images

This project answers the question “What if I could show pictures AND ask questions?” It uses Google’s Gemini 2.0 Flash model:

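```python
import google.generativeai as genai
from PIL import Image


def initialize_gemini():
    # Secure API handling (decoded_key is prepared elsewhere in the app)
    genai.configure(api_key=decoded_key)
    return genai.GenerativeModel("gemini-2.0-flash-exp")


# Handling multimodal input: inputs already holds the user's text,
# and uploaded_file comes from the upload widget
if uploaded_file:
    inputs.append(Image.open(uploaded_file))

response = model.generate_content(inputs)
```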
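The excerpt above leaves out where `decoded_key` and `inputs` come from. Here is one way they could be produced, as a sketch only: the environment variable name `GEMINI_API_KEY_B64`, the base64 step, and both helper functions are my assumptions for illustration, not the project's actual code. Keep in mind that base64 is an encoding, not encryption; it only keeps the raw key out of the source file.

```python
import base64
import os


def load_api_key(env_var="GEMINI_API_KEY_B64"):
    """Read a base64-encoded key from the environment and decode it (hypothetical setup)."""
    encoded = os.environ.get(env_var)
    if not encoded:
        raise RuntimeError(f"Set {env_var} before starting the app")
    return base64.b64decode(encoded).decode("utf-8")


def build_inputs(prompt, image=None):
    """Assemble the list handed to the model: the text first, then the image if there is one."""
    inputs = [prompt]
    if image is not None:
        inputs.append(image)
    return inputs


# Demo with a fake key so the snippet runs without real credentials.
os.environ["GEMINI_API_KEY_B64"] = base64.b64encode(b"demo-key").decode("ascii")
decoded_key = load_api_key()
text_only = build_inputs("Describe this chart")
with_image = build_inputs("Describe this chart", image="<PIL image goes here>")
print(len(text_only), len(with_image))
```

Putting the text first and the image second mirrors the `inputs.append(...)` call in the excerpt.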

This implementation does something remarkable:

- Seamless blending of visual and textual understanding

- Context-aware responses that reference image content

- Streaming interface that feels truly conversational

I particularly love how it handles follow-up questions about previously uploaded images.
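For the curious, here is one possible way to support follow-ups like that: remember the most recent image and re-attach it to every request along with the conversation so far. This is a simplified sketch under my own assumptions, and the `ChatMemory` class is hypothetical; the app itself may do this differently (for example, through the SDK's chat sessions).

```python
class ChatMemory:
    """Keeps the conversation turns and the most recently uploaded image."""

    def __init__(self):
        self.turns = []         # (role, text) pairs, oldest first
        self.last_image = None  # whatever image object the app uses

    def add_user_turn(self, text, image=None):
        # A new upload replaces the remembered image; no upload keeps the old one.
        if image is not None:
            self.last_image = image
        self.turns.append(("user", text))

    def add_model_turn(self, text):
        self.turns.append(("model", text))

    def build_inputs(self):
        """Flatten the history into one input list, re-attaching the remembered image."""
        inputs = [f"{role}: {text}" for role, text in self.turns]
        if self.last_image is not None:
            inputs.append(self.last_image)
        return inputs


memory = ChatMemory()
memory.add_user_turn("What is in this picture?", image="<image object>")
memory.add_model_turn("A bar chart of quarterly sales.")
memory.add_user_turn("Which bar is the tallest?")  # no new upload
follow_up_inputs = memory.build_inputs()
print(follow_up_inputs)
```

The second question carries no image of its own, yet the model still receives the earlier one, so it has something to look at when answering.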

Making It Work: A Developer’s Journey

Prerequisites

- Python 3.10+ environment

- Ollama installed and running

- 8GB+ RAM (16GB recommended)
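If you want to check the first two items before installing anything, a small script along these lines should do. It is a sketch using only the standard library; the `check_prerequisites` name is made up, it only checks that an `ollama` executable is on your PATH (not that the service is running), and it skips the RAM check because there is no portable standard-library way to read it.

```python
import shutil
import sys


def check_prerequisites(min_python=(3, 10)):
    """Report whether Python is new enough and whether an ollama binary is on PATH."""
    return {
        "python_ok": sys.version_info >= min_python,
        "ollama_on_path": shutil.which("ollama") is not None,
    }


report = check_prerequisites()
for name, ok in report.items():
    print(f"{name}: {'yes' if ok else 'no'}")
```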

Installation Steps

- Clone the repository:

```shell
git clone https://github.com/piktx/flash-mutimodal-chatbot.git
cd flash-mutimodal-chatbot
```

- Install dependencies:

```shell
pip install -r requirements.txt
```

- Start Ollama service:

```shell
ollama serve
```

- Launch the Streamlit app:

```shell
streamlit run app.py
```

The interface will automatically open in your default browser at localhost:8501.

Final Thoughts

This project demonstrates how accessible AI has become. Whether you’re:

- A business user tired of pivot tables,

- A developer exploring multimodal AI, or

- An AI enthusiast curious about real applications,

there’s never been a better time to experiment.

Last but not least, if you liked this project, don’t forget to give it a star on GitHub.